This is the last day of our liveblog coverage of Kernel Recipes. Enjoy!

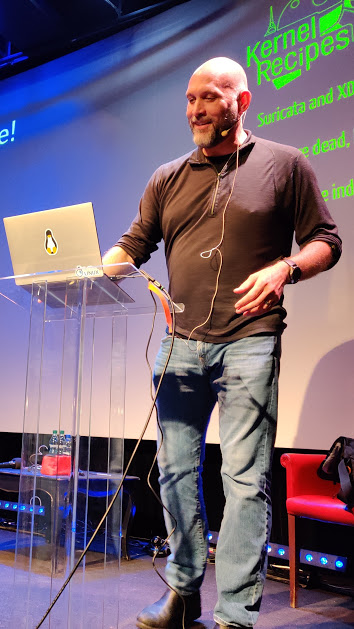

Suricata and XDP — Eric Leblond

Eric has been working with networking for quite a long time, but it didn’t help with his imposter syndrome, he says.

Suricata is an IDS/IPS: it analyzes network traffic from a probe and raises alerts. It has advanced application-layer analysis: for example, it can do TCP payload reassembly.

Suricata works as a passive packet sniffer. It needs to work at the speed of the network, because it has no influence on network traffic. It must not miss packets, even under load. Eric says that at 3% packet loss, 10% of the alerts are missed; at 5.5% of loss, 50% of the alerts are missed.

It therefore needs a lot of CPU power, as well as a proper way to load balance input network flows to CPUs: a scheduling issue can cause packet losses. There are multiple bypass strategies to mitigate that: local bypass works in userspace, but does not bypass the kernel. Capture bypass works before the netstack in the kernel.
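To illustrate what balancing flows across CPUs requires, here is a minimal sketch of a symmetric flow hash: both directions of a connection must land on the same worker, otherwise stream reassembly breaks. The function name `flow_worker` and the use of CRC32 are assumptions made for this illustration; this is not the hash Suricata or any NIC actually uses.

```python
import zlib
from ipaddress import ip_address


def flow_worker(src_ip, src_port, dst_ip, dst_port, proto, n_workers):
    """Pick a worker index so that both directions of a flow agree."""
    # Order the two endpoints so that A -> B and B -> A build the same key.
    a = (ip_address(src_ip).packed, src_port)
    b = (ip_address(dst_ip).packed, dst_port)
    lo, hi = sorted((a, b))
    key = (
        lo[0] + lo[1].to_bytes(2, "big")
        + hi[0] + hi[1].to_bytes(2, "big")
        + bytes([proto])
    )
    # CRC32 is only a stand-in for whatever hash the capture layer provides.
    return zlib.crc32(key) % n_workers


# Both directions of the same TCP connection map to the same worker.
client_to_server = flow_worker("10.0.0.1", 40000, "192.0.2.7", 443, 6, 8)
server_to_client = flow_worker("192.0.2.7", 443, "10.0.0.1", 40000, 6, 8)
print(client_to_server == server_to_client)
```

Sorting the endpoints before hashing is the simplest way to get symmetry; a hash that is not symmetric would split a single connection across two workers.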

Suricata also allows dropping traffic that is too intensive (e.g. Netflix), or that it can’t understand (post-handshake TLS). The bypass implementation started with NFQ, then moved to AF_PACKET XDP and eBPF.

The eBPF bypass implementation relies on libbpf. Flow detection was based on an eBPF flow table that was managed periodically. Unfortunately, this was very slow because it was syscall (and CPU) intensive.
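The cost of a periodically managed flow table can be sketched with a small simulation. Everything here is hypothetical (the `FlowTable` class, its methods, the timeout values): a plain dictionary stands in for the eBPF map, and the `lookups` counter stands in for the per-entry accesses that would each be a syscall from userspace.

```python
class FlowTable:
    """Toy model of a bypass flow table shared by a fast path and a manager."""

    def __init__(self):
        self.entries = {}  # flow key -> [packet count, last seen timestamp]
        self.lookups = 0   # stands in for per-entry syscalls during a sweep

    def bypass(self, key, now):
        """Manager side: mark a flow as bypassed."""
        self.entries[key] = [0, now]

    def on_packet(self, key, now):
        """Fast path: return True if the packet belongs to a bypassed flow."""
        entry = self.entries.get(key)
        if entry is None:
            return False
        entry[0] += 1
        entry[1] = now
        return True

    def sweep(self, now, timeout):
        """Manager side: visit every entry and evict flows idle for too long."""
        expired = []
        for key in list(self.entries):
            self.lookups += 1
            if now - self.entries[key][1] > timeout:
                expired.append(key)
                del self.entries[key]
        return expired


table = FlowTable()
table.bypass("flow-a", now=0)
table.bypass("flow-b", now=0)
table.on_packet("flow-a", now=50)
print(table.sweep(now=60, timeout=30), table.lookups)
```

Each sweep touches every entry whether or not it expired, so the work grows with the number of bypassed flows rather than with the number of evictions.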

He then worked with a Netronome card, which can run XDP eBPF code directly. It handles the eBPF maps directly, but a few hardware-related constraints had to be dealt with. He even used Netronome RSS load balancing to distribute packets on the proper queue to share load; all done in eBPF code on the card.

Suricata startup is also challenging, because analysis starts in the middle of most network flows. To help with restarts, eBPF maps are pinned in order to keep the working in-memory data between Suricata sessions.

XDP is used in Suricata to do tunnel decapsulation (e.g. GRE) by splitting the inner data, so that it can be load-balanced properly. Suricata does TLS handshake analysis, but wants to bypass the encrypted data. Initially this was done at the flow level, but it caused issues because there were many small sessions. It was then done with XDP pattern matching.
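As a rough illustration of what GRE decapsulation involves, the sketch below locates the inner packet of an IPv4/GRE frame in plain Python. It is not the XDP code from the talk: the function name `gre_inner` is made up for this example, it starts at the outer IPv4 header (no Ethernet header), and it only handles the optional checksum, key and sequence fields.

```python
import struct

GRE_PROTO = 47  # IPv4 protocol number assigned to GRE


def gre_inner(packet):
    """Return (ethertype, inner bytes) for an IPv4/GRE packet, else None."""
    if len(packet) < 20 or packet[0] >> 4 != 4:
        return None
    ihl = (packet[0] & 0x0F) * 4  # outer IPv4 header length in bytes
    if packet[9] != GRE_PROTO or len(packet) < ihl + 4:
        return None
    flags, ethertype = struct.unpack_from("!HH", packet, ihl)
    offset = ihl + 4
    if flags & 0x8000:  # checksum present (checksum + reserved field)
        offset += 4
    if flags & 0x2000:  # key present
        offset += 4
    if flags & 0x1000:  # sequence number present
        offset += 4
    if len(packet) < offset:
        return None
    return ethertype, packet[offset:]


# Hand-built outer IPv4 header (protocol 47) followed by GRE with a key.
outer = bytes([0x45, 0, 0, 0, 0, 0, 0, 0, 64, GRE_PROTO, 0, 0]) + bytes(8)
gre = bytes([0x20, 0x00, 0x08, 0x00, 0, 0, 0, 42])
print(gre_inner(outer + gre + b"inner"))
```

Once the inner headers are exposed, the inner addresses and ports can be used for load balancing instead of the tunnel endpoints, which would otherwise send every tunneled flow to the same queue.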

AF_XDP was very interesting for the Suricata use case, because it provided faster analysis than AF_PACKET, bypassing the netstack’s skb creation for example. But Eric discovered that the hardware timestamp was missing with AF_XDP, which is not workable for Suricata, since it needs the timestamp for packet re-ordering between NICs.

Eric says that the situation has greatly improved since he started in 2015, with libbpf now being the standard tool to interact with eBPF and shipping in most distributions. He wishes that there were common libraries to share eBPF protocol decoding. The situation could also improve with the shipping of eBPF files, which currently need to be compiled on the production system.

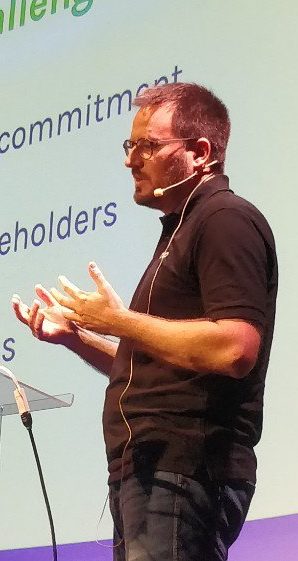

CVEs are dead, long live the CVE! — Greg Kroah-Hartman

Greg says that the 40-minute rant in this talk is really his personal opinion only.

A CVE is a unique identifier for a security issue. It’s a dictionary, not a database. It started because there wasn’t any way to identify a particular issue; cgi plugins and zlib had a lot of issues that pushed for a way to identify them.

The CVE format is CVE-YEAR-NUMBER, like CVE-2019-12379, that is unique per issue. Multiple vendors can hand them out, but not the Linux kernel security team.
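The identifier format is simple enough to validate with a regular expression. This is a minimal sketch; `parse_cve` is a name chosen for this example, and the pattern assumes a four-digit year followed by a sequence number of four or more digits.

```python
import re

# Assumed shape: CVE-<4-digit year>-<sequence number of 4 or more digits>
CVE_RE = re.compile(r"CVE-(\d{4})-(\d{4,})")


def parse_cve(identifier):
    """Return (year, number) for a well-formed CVE ID, or None otherwise."""
    match = CVE_RE.fullmatch(identifier)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


print(parse_cve("CVE-2019-12379"))
print(parse_cve("CVE-19-1"))
```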

Then there is the NVD, a database of CVEs, that gives them scores. The issue is that it’s slow to update. The Chinese NVD (CNNVD) is faster to pick up CVEs, but doesn’t update them.

The issue with the NVD is that it misses some issues. It is run and funded by the US government, which might cause conflicts of interest, Greg says.

Greg says there is no mapping between CVEs and patches. Spectre has 1 CVE entry, but hundreds of patches. Or sometimes, a single kernel patch has multiple CVEs. The NVD does not point to fixes.

An issue with CVEs is that they are abused by developers to pad their resumes. Greg showed the example of CVE-2019-12379, which affected his code and was simply wrong: there was a patch to fix an issue in case of kmalloc failing, but Ben Hutchings later found that the patch was worse than the initial behaviour. Also, despite the patch being reverted and never reaching a public kernel.org release, the CVE had to be disputed by Ben, and it carried a Medium score for a non-exploitable issue.

Greg also says that CVEs are sometimes abused by enterprise distribution engineers who are only allowed to backport security fixes: they use them to backport bug fixes that otherwise would not pass through management.

Greg says that every week, there are lots of fixes with a security impact that are not assigned a CVE; anyone running a distribution kernel that only backports CVE fixes is vulnerable to known issues.

He gave an example of a “popular phone”, running a Linux 4.14.85 kernel with a few backports. But since they are missing 1700+ patches, they missed 12 documented CVEs, as well as dozens of other security issues.

At Google, they found out that 92% of kernel security issues that were reported were already fixed in LTS kernels. That led to a change in policy to require updating the kernels from the LTS branch. There are still issues with out-of-tree code.

How to fix CVEs? Greg says the best course of action is to ignore them and build something new. It does need to have a distributed unique identifier. Greg says that upstream git SHA-1 commit IDs already work and are tracked.

Maybe they need better marketing? Greg proposes to call those Change IDs: CID. The format would be CID-[12 hex digits], for example CID-7caac62ed598. Rebranding with CID identification is the way forward for open projects according to Greg.
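Turning a commit into such an identifier could look like the sketch below. It assumes that the 12 hex digits are simply the abbreviated git commit ID; the function name `change_id` and the sample hash are made up for this illustration.

```python
import re

SHA1_RE = re.compile(r"[0-9a-f]{40}")


def change_id(commit_sha1):
    """Build a CID from a full git commit ID (assumed: its first 12 hex digits)."""
    sha = commit_sha1.strip().lower()
    if SHA1_RE.fullmatch(sha) is None:
        raise ValueError("expected a full 40-character hex commit ID")
    return "CID-" + sha[:12]


# Hypothetical commit ID, not a real kernel commit.
print(change_id("0123456789abcdef0123456789abcdef01234567"))
```

In a real tree the full ID would come from git itself, for instance from the output of `git rev-parse HEAD`.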

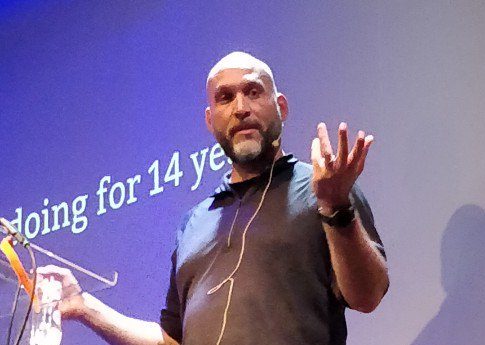

Driving the industry toward upstream first — Enric Balletbo i Serra

Enric is a contributor to Chrome OS ARM platforms as part of his work at Collabora.

His dream is that one day a SoC vendor would not give him a BSP, and would instead point to upstream Linux or u-boot as the code to use.

It’s easier to work behind closed doors, Enric says. Working upstream takes time and patience. But it also brings higher quality and less maintenance.

Chrome OS is the Linux-based OS inside every Chromebook. Security is important for Chrome OS, Enric says: devices are supported for 6 years, and updated every 6 weeks. Within these constraints, it’s impossible to have a different kernel for every device. Right now, it works by using 5 to 6 LTS branches per hardware family, and attempting to update to a new LTS at least once during the lifetime of a device.

Chrome OS development happens in the open, unlike Android, Enric says. To help with development, kernel commits are tagged to identify upstream, backported, or chromium-specific code. A lot of commits are backported to the chromium kernel branches.
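Commit tagging of this kind lends itself to simple tooling. The sketch below classifies a commit subject by its prefix; the exact prefix spellings (`UPSTREAM:`, `BACKPORT:`, `CHROMIUM:`) are assumptions about the convention rather than something quoted in the talk, and the subjects are invented examples.

```python
# Assumed prefix spellings; the talk only mentions the three categories.
PREFIXES = {
    "UPSTREAM": "upstream",
    "BACKPORT": "backported",
    "CHROMIUM": "chromium-specific",
}


def classify(subject):
    """Classify a commit subject line by its leading tag."""
    tag, sep, _ = subject.partition(":")
    if sep and tag in PREFIXES:
        return PREFIXES[tag]
    return "untagged"


# Invented subjects, for illustration only.
for subject in (
    "UPSTREAM: mfd: cros_ec: example subject",
    "CHROMIUM: config: example subject",
    "mfd: cros_ec: example subject",
):
    print(classify(subject), "<-", subject)
```

Counting the categories over a branch history gives a quick measure of how far a tree has drifted from mainline.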

A requirement for hardware vendors working with Chrome OS is to work with upstream. Enric showed an example with Mediatek and Rockchip, which have started sending patches upstream for Chromebook support.

In Chromebooks, there is a CrOS-specific Embedded Controller (EC); its upstream support was incomplete, but Enric has been working on improving it. He worked by getting the patches from CrOS branches, then squashing and splitting them before submission.

Sometimes the patches were accepted as-is, but other times they had to be reworked: for example, the MFD (multi-function devices) subsystem was being abused. Now, most of the CrOS EC drivers are upstream. New related drivers will go through upstream first.

What’s different from mainline in CrOS kernel code? Mostly GPU and network drivers. The rest is part of a long tail. For net, it’s because a lot of the code was backported. For GPUs, the midgard driver is the biggest source of patches, because when it was released, no alternative existed. But now, the panfrost driver for the same Mali GPUs is upstream.

Enric showed a demo with a Samsung Chromebook Plus with a Rockchip SoC and an upstream Debian distribution. A lot of hardware is already supported, including the GPU.

That’s it for this morning! Continue with the afternoon liveblog.
