QEMU in UEFI — Alexander Graf

Running x86 servers is usually easy, but early ARM servers faced quite a challenge when they had to run x86 code.

ARM and x86 differ in their register sets and instruction sets, and aren’t compatible at all. That’s where QEMU comes in: it can emulate another CPU and run code compiled for different hardware. It also emulates all the other hardware in a computer.

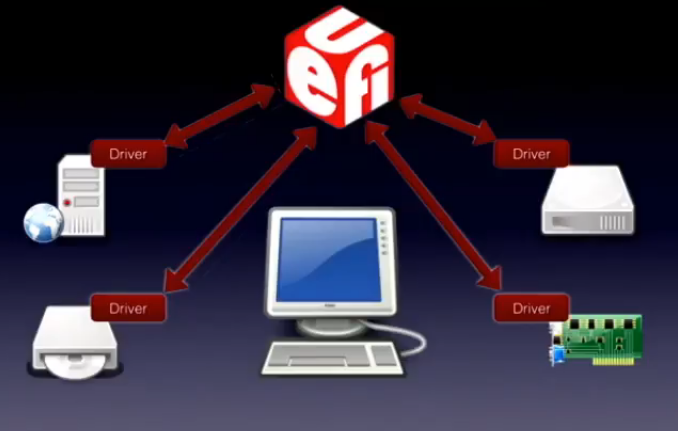

In UEFI land, as in Linux, drivers give access to the hardware: network cards, disks, etc. Alexander says the UEFI driver interface isn’t very well designed to separate virtual interfaces from hardware interfaces.

Alexander says that data structures in aarch64 (the 64-bit ARM architecture) are compatible with the ones on x86_64 servers: pointer size, padding and endianness are the same. But how does one call ARM UEFI function pointers from an x86 OS in QEMU?

The trick is to use the NX (no-execute) feature on the memory regions that these function pointers may point into. Any jump into such a region generates a trap. The trap calls the appropriate QEMU code, which translates between the two calling conventions (ABIs) and calls the proper function. This works in both directions: it is emulation at function-call boundaries.
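The function-level trick can be modeled in a few lines of C. This is a toy sketch, not QEMU or EDK2 code. The register structs, the `x64_run_fn` hook and `handle_nx_trap` are hypothetical, and the emulator itself is replaced by a plain C callback. The sketch shows the one step that matters. When a fault lands inside an NX region holding x86 code, the AArch64 argument registers are remapped to the Microsoft x64 convention that x86_64 UEFI uses, and the result is copied back.

```c
#include <stddef.h>
#include <stdint.h>

/* Toy register files, reduced to the argument/return registers involved. */
struct a64_regs { uint64_t x[8]; };                  /* AAPCS64: args in X0-X7, result in X0 */
struct x64_regs { uint64_t rcx, rdx, r8, r9, rax; }; /* MS x64 ABI: args in RCX, RDX, R8, R9; result in RAX */

/* Hypothetical hook standing in for "start the x86 emulator at pc". */
typedef void (*x64_run_fn)(uint64_t pc, struct x64_regs *regs);

/* An address range mapped non-executable because it holds x86 code. */
struct nx_region {
    uint64_t start, end; /* [start, end) */
    x64_run_fn run;
};

/* Invoked on an instruction-fetch fault at fault_pc. Translates the native call
 * into the x86 ABI, runs the emulated callee, and copies the result back.
 * Returns 1 if the fault was an emulated call, 0 if it was a genuine fault. */
static int handle_nx_trap(const struct nx_region *regions, size_t n,
                          uint64_t fault_pc, struct a64_regs *cpu)
{
    for (size_t i = 0; i < n; i++) {
        if (fault_pc < regions[i].start || fault_pc >= regions[i].end)
            continue;
        /* Only the first four integer arguments travel in registers under the
         * MS x64 ABI; stack arguments and floating point are ignored here. */
        struct x64_regs r = { .rcx = cpu->x[0], .rdx = cpu->x[1],
                              .r8 = cpu->x[2], .r9 = cpu->x[3] };
        regions[i].run(fault_pc, &r);
        cpu->x[0] = r.rax; /* x86 return value becomes the AArch64 return value */
        return 1;
    }
    return 0;
}

/* Example "x86 function" for the sketch: returns the sum of its first two arguments. */
static void demo_x64_add(uint64_t pc, struct x64_regs *r)
{
    (void)pc;
    r->rax = r->rcx + r->rdx;
}
```

The reverse direction works the same way with the roles swapped. When x86 code calls a native UEFI service, the trampoline maps RCX, RDX, R8 and R9 back to X0-X3 and copies X0 into RAX on return.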

QEMU contains a code base called TCG (Tiny Code Generator), licensed under the LGPL, that performs on-the-fly JIT instruction-set translation and supports x86_64. Alexander says he didn’t find any other project that did the same.

Alexander showed a demo of emulated arm64 hardware running UEFI. It loaded an x86 Ethernet driver, which was then used to boot an x86 OS over the network.

He said that once the emulator hooks are merged in the next EDK release, this type of emulation might start to appear in several ARM servers. Answering a question from the audience, he said that native drivers will always be better, but a fallback solution is always useful.

Is Video4Linux ready for all cutting-edge hardware? — Ezequiel Garcia

“No” is the executive summary, according to Ezequiel.

V4L2 has a relatively simple userspace API, he says: you configure the device, then request buffers.

Codecs are supported in the kernel through the memory-to-memory (M2M) API. Stateful codecs didn’t have an interface specification, so each driver handles things slightly differently.

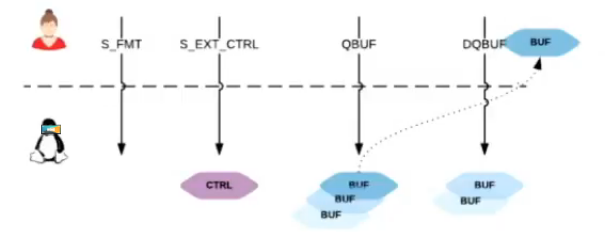

Stateless codecs rely on more work from the application, which must do extra parsing and set up the hardware properly. In this case, the stream-based API isn’t sufficient, and for these drivers too, the specification is a work in progress. The problem with the streaming API is that there’s no correlation between the controls and the payloads you send and receive. The Request API fixes this: buffer and control metadata can be synchronized. It is on the verge of being merged, after four years of discussions involving many developers.

DMA fences are a future area of evolution in V4L2, providing explicit synchronization for buffers. They reduce the number of buffers needed to fill the pipeline, since buffers can go directly from V4L2 to DRM without passing through userspace.

Asynchronous UVC, being worked on by Kieran Bingham, should improve USB packet handling on multi-core SoCs.

Packet probes and eBPF filtering in Skydive — Nicolas Planel

Skydive is a network topology and protocol analyzer with support for software-defined networks. It provides a nice interface to visualize network topology, flows, routing tables, etc.

It helps with troubleshooting, flow monitoring and validation. Its dynamic UI uses d3.js with a physics-like feel. You can query the graph with the Gremlin language. Gremlin is a graph traversal language, and Skydive has its own implementation of it (not using TinkerPop).

In order to fetch information, Skydive relies on multiple topology probes: netlink, netns, ovs, docker, kubernetes, neutron, socketinfo, etc.

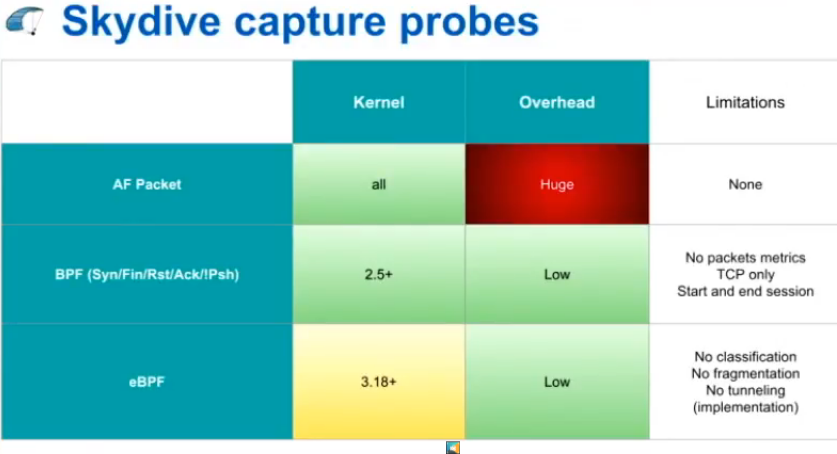

The eBPF probes are fast and run in O(1), Nicolas says. They have a userspace part (in Skydive) and a kernel-space part. The userspace part matches the flows to topology nodes. Skydive also matches socket information to processes using kprobes and eBPF.
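To make the O(1) claim concrete, here is a userspace C model of the kind of per-flow table such a probe maintains. It is a hash table keyed by the 5-tuple, with each packet accounted for in constant expected time. This is not Skydive's code. In a real probe, the kernel side would keep the table in an eBPF map (for example `BPF_MAP_TYPE_HASH`), and the userspace side would read it back and attach flows to topology nodes. The key layout, the FNV-style hash, linear probing and the table size are all assumptions.

```c
#include <stdint.h>
#include <string.h>

/* Hypothetical flow key: IPv4 5-tuple. */
struct flow_key {
    uint32_t saddr, daddr;
    uint16_t sport, dport;
    uint8_t  proto;
};

struct flow_stats { uint64_t packets, bytes; };

#define FLOW_SLOTS 1024u /* power of two, fixed like a BPF map's max_entries */

struct flow_table {
    struct flow_key   keys[FLOW_SLOTS];
    struct flow_stats stats[FLOW_SLOTS];
    uint8_t           used[FLOW_SLOTS];
};

/* FNV-style hash over the key fields (not the padding bytes). */
static uint32_t flow_hash(const struct flow_key *k)
{
    uint32_t parts[4] = { k->saddr, k->daddr,
                          ((uint32_t)k->sport << 16) | k->dport, k->proto };
    uint32_t h = 2166136261u;
    for (int i = 0; i < 4; i++) {
        h ^= parts[i];
        h *= 16777619u;
    }
    return h;
}

static int flow_eq(const struct flow_key *a, const struct flow_key *b)
{
    return a->saddr == b->saddr && a->daddr == b->daddr &&
           a->sport == b->sport && a->dport == b->dport && a->proto == b->proto;
}

/* Account one packet of `len` bytes; expected O(1) with linear probing.
 * Returns the flow's stats, or NULL if the table is full. */
static struct flow_stats *flow_update(struct flow_table *t,
                                      const struct flow_key *k, uint32_t len)
{
    uint32_t i = flow_hash(k) & (FLOW_SLOTS - 1);
    for (uint32_t probe = 0; probe < FLOW_SLOTS; probe++, i = (i + 1) & (FLOW_SLOTS - 1)) {
        if (!t->used[i]) {            /* new flow: claim the empty slot */
            t->used[i] = 1;
            t->keys[i] = *k;
            memset(&t->stats[i], 0, sizeof(t->stats[i]));
        } else if (!flow_eq(&t->keys[i], k)) {
            continue;                 /* slot taken by another flow */
        }
        t->stats[i].packets++;
        t->stats[i].bytes += len;
        return &t->stats[i];
    }
    return NULL;
}
```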

The roadmap includes hybrid capture using eBPF dissectors. Augmenting the information on local processes, such as retransmission counters, with eBPF and kprobes is also planned, as is extracting the graph engine (Steffi) for use outside Skydive.

Overview of SD/eMMC, their high speed modes and Linux support — Grégory Clément

SD cards date back to 1999 and were derived from the earlier MMC standard. They have 9 pins and can also work in SPI mode. The SD bus protocol consists of a command stream and a data stream. The transaction model is command/response, with all commands initiated by the host.
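The host-initiated command can be sketched in C. Each command token is 48 bits long and contains, in order:

- a start bit (0) and a transmission bit (1)
- a 6-bit command index
- a 32-bit argument
- a CRC7 with polynomial x^7 + x^3 + 1
- an end bit (1)

`crc7` and `sd_build_cmd` are illustrative names, not a Linux API.

```c
#include <stddef.h>
#include <stdint.h>

/* CRC7, polynomial x^7 + x^3 + 1, computed MSB first over the message bytes. */
static uint8_t crc7(const uint8_t *data, size_t len)
{
    uint8_t crc = 0;
    for (size_t i = 0; i < len; i++) {
        uint8_t d = data[i];
        for (int bit = 0; bit < 8; bit++) {
            crc <<= 1;                 /* bit 7 now holds the previous CRC MSB */
            if ((d ^ crc) & 0x80)      /* feedback = data bit XOR CRC MSB */
                crc ^= 0x09;           /* x^3 + 1 */
            d <<= 1;
        }
    }
    return crc & 0x7f;
}

/* Build the 6-byte command token: 0b01 + index, 32-bit argument, CRC7 + end bit. */
static void sd_build_cmd(uint8_t out[6], uint8_t index, uint32_t arg)
{
    out[0] = (uint8_t)(0x40 | (index & 0x3f));
    out[1] = (uint8_t)(arg >> 24);
    out[2] = (uint8_t)(arg >> 16);
    out[3] = (uint8_t)(arg >> 8);
    out[4] = (uint8_t)arg;
    out[5] = (uint8_t)((crc7(out, 5) << 1) | 1);
}
```

For CMD0 (GO_IDLE_STATE) with a zero argument, this produces `40 00 00 00 00 95`. That is the well-known reset token that also appears in SPI-mode initialization code.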

MMC evolved and diverged from the SD standard, with extensions from the MMCA and JEDEC. eMMC appeared in 2007. It is the embedded, on-board version, designed with BGA packages in mind, and it is now widely used.

Linux support for MMC started in 2.6.9; SD card support was added in 2.6.14, and SDHCI arrived in 2.6.17. The first high-speed mode for MMC and SD (with a clock of up to 52 MHz) was added in 2.6.20, and SDIO in 2.6.24. The code is split between core and host parts, the latter being more hardware-specific.

New speed modes were added over time to raise the base clock speed, and they are selected with the CMD6 command. Starting with UHS-I, the new modes work at 1.8 V instead of 3.3 V, so voltage switching is required.
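CMD6 means different things on the two sides.

- On SD (SWITCH_FUNC), bit 31 selects check or switch mode. The low 24 bits carry six 4-bit function groups, where 0xF keeps the current function. Function group 1 (index 0 below) selects the bus speed mode, and value 1 means high speed.
- On eMMC (SWITCH), the argument writes one byte of the EXT_CSD register. Writing `HS_TIMING` (byte 185) selects HS, HS200 or HS400.

The helper names below are hypothetical. The bit layouts follow the SD and eMMC specifications.

```c
#include <stdint.h>

/* SD CMD6 argument. group is 0-based (0 = function group 1, access mode),
 * function is the 4-bit value to select (1 = high speed, 3 = SDR104). */
static uint32_t sd_cmd6_arg(int do_switch, unsigned group, unsigned function)
{
    uint32_t arg = ((uint32_t)(do_switch ? 1 : 0) << 31) | 0x00FFFFFFu; /* 0xF = no change */
    arg &= ~(0xFu << (group * 4));
    arg |= (uint32_t)(function & 0xFu) << (group * 4);
    return arg;
}

/* eMMC CMD6 argument: access mode in bits 25:24, EXT_CSD byte index in 23:16,
 * value in 15:8, command set in 2:0 (left at 0 here). */
#define MMC_SWITCH_WRITE_BYTE 0x03u
#define EXT_CSD_HS_TIMING     185u /* 1 = HS, 2 = HS200, 3 = HS400 */

static uint32_t mmc_cmd6_arg(unsigned index, unsigned value)
{
    return (MMC_SWITCH_WRITE_BYTE << 24) | ((index & 0xFFu) << 16) | ((value & 0xFFu) << 8);
}
```

For instance, `sd_cmd6_arg(1, 0, 1)` yields `0x80FFFFF1`, which switches an SD card to high speed. `mmc_cmd6_arg(EXT_CSD_HS_TIMING, 2)` yields `0x03B90200`, which selects HS200 timing on eMMC.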

In MMC, the DDR mode and then HS200 were added in 2009 and 2011, followed by HS400 in 2013. HS400 works at 200 MHz with dual data rate and a new enhanced data strobe line. SD also gained new speed modes with UHS-II in SD 4.1, which uses a completely new set of signals.
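The mode names follow from simple arithmetic: peak throughput is bus width times clock times transfers per clock cycle. The helper below is a small illustration. These figures are raw bus rates in MB/s (10^6 bytes per second) that ignore command and CRC overhead.

```c
/* Raw peak bus throughput in MB/s: width (bits) x clock (MHz) x transfers per cycle / 8. */
static unsigned bus_peak_mbps(unsigned width_bits, unsigned clock_mhz, int ddr)
{
    return width_bits * clock_mhz * (ddr ? 2u : 1u) / 8u;
}
```

The helper gives the following rates:

- An 8-bit eMMC bus at 200 MHz gives 200 MB/s in HS200 and 400 MB/s in HS400 (DDR), which matches the names.
- The same bus at 52 MHz DDR gives 104 MB/s.
- A 4-bit SD bus at UHS-I SDR104's 208 MHz also gives 104 MB/s.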

Currently, eMMC speed mode support is quite complete in Linux, with most development focusing on hardware-specific code. UHS-II and UHS-III aren’t supported in Linux, but Grégory says no ARM SoC supports them either.

In June 2018, the SD Association announced a new improvement, called SD Express, based on PCIe and NVMe, which should be well supported in Linux.

That’s it for Kernel Recipes 2018! Thanks for following the liveblog for this 7th edition, and see you next year!