Welcome to the Kernel Recipes Liveblog! This follows yesterday afternoon’s liveblog.

Atomic explosion: evolution of relaxed concurrency primitives — Will Deacon

Will is the co-maintainer of the arm64 architecture, and recently got involved in the concurrency primitives used in the arm kernel. He started by saying he doesn’t really like concurrency, since it’s often not synonymous with performance.

There are many low-level concurrency primitives in the kernel, but he started by describing atomics: operations that are guaranteed to be indivisible. Core code provides a generic C implementation built on top of locking primitives. But Will says you don’t want to look at this code, nor use it, for performance reasons.

Historically, atomic_t wasn’t well defined, was separate from cmpxchg, and was specific to each architecture. He’s recently been working on improving this. For example, core code now generates the operations an architecture doesn’t provide, using the lower-level primitives that are available.
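
As a rough illustration of that layering, here is a minimal user-space sketch that uses C11 `<stdatomic.h>` rather than the kernel’s own API: a fetch-and-add derived from a compare-and-exchange retry loop, with add-and-return and increment layered on top of it. The function names are hypothetical, and this is not the kernel’s actual fallback code.

```c
#include <stdatomic.h>

/* Hypothetical helpers: derive missing operations from a lower-level
 * primitive (C11 compare-and-exchange). Not the kernel's fallback code. */

/* fetch_add built from a compare-and-exchange retry loop. */
static int fetch_add_via_cmpxchg(atomic_int *v, int i)
{
    int old = atomic_load_explicit(v, memory_order_relaxed);
    /* On failure, 'old' is refreshed with the current value; retry. */
    while (!atomic_compare_exchange_weak(v, &old, old + i))
        ;
    return old;
}

/* add_return layered on top of fetch_add: the new value is old + i. */
static int add_return_via_cmpxchg(atomic_int *v, int i)
{
    return fetch_add_via_cmpxchg(v, i) + i;
}

/* inc layered on top of add_return, discarding the result. */
static void inc_via_add_return(atomic_int *v)
{
    (void)add_return_via_cmpxchg(v, 1);
}
```

Only the compare-and-exchange touches memory atomically here; every other operation is expressed in terms of it, which is the point of generating the missing ones from what the architecture provides.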

The new _relaxed variants provide atomicity without any ordering guarantees, so the compiler and the CPU are free to reorder them with respect to other memory accesses. But, while they’re very useful, he says they’re still under-used, which is why he’s presenting today, so people can understand the new semantics.
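
A minimal sketch of where relaxed ordering fits, assuming C11 `memory_order_relaxed` as a stand-in for the kernel’s _relaxed operations: a statistics counter incremented from several threads, where only the final total matters and no other data depends on the order of the increments. The names `hits`, `hit_worker` and `count_hits` are hypothetical.

```c
#include <pthread.h>
#include <stdatomic.h>

/* Hypothetical statistics counter: only the final total matters. */
static atomic_long hits;

static void *hit_worker(void *arg)
{
    long n = *(const long *)arg;
    for (long i = 0; i < n; i++)
        /* Atomic, but imposes no ordering on surrounding accesses. */
        atomic_fetch_add_explicit(&hits, 1, memory_order_relaxed);
    return NULL;
}

/* Runs up to 16 workers and returns the final count. */
static long count_hits(int nthreads, long per_thread)
{
    pthread_t t[16];
    if (nthreads > 16)
        nthreads = 16;
    atomic_store_explicit(&hits, 0, memory_order_relaxed);
    for (int i = 0; i < nthreads; i++)
        pthread_create(&t[i], NULL, hit_worker, &per_thread);
    /* pthread_join synchronizes with each worker's exit, so every
     * increment is visible when the total is read below. */
    for (int i = 0; i < nthreads; i++)
        pthread_join(t[i], NULL);
    return atomic_load_explicit(&hits, memory_order_relaxed);
}
```

Atomicity still guarantees that no increment is lost; only the ordering relative to other memory accesses is given up.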

There are also the fully-ordered atomic_t operations, which behave as if surrounded by smp_mb() and are ordered with respect to all CPUs; they are very useful, but quite expensive on all architectures.
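
The classic case that needs full ordering is the store-buffering pattern, sketched here with C11 sequentially consistent operations as an assumed analogue of the kernel’s fully-ordered atomics (all names are hypothetical). Each thread sets its own flag with an atomic exchange, then reads the other thread’s flag; full ordering forbids the outcome where both threads read 0.

```c
#include <pthread.h>
#include <stdatomic.h>
#include <stdbool.h>

static atomic_int sb_x, sb_y;
static int sb_r0, sb_r1;  /* results, read only after pthread_join */

static void *sb_t0(void *arg)
{
    (void)arg;
    atomic_exchange_explicit(&sb_x, 1, memory_order_seq_cst);
    sb_r0 = atomic_load_explicit(&sb_y, memory_order_seq_cst);
    return NULL;
}

static void *sb_t1(void *arg)
{
    (void)arg;
    atomic_exchange_explicit(&sb_y, 1, memory_order_seq_cst);
    sb_r1 = atomic_load_explicit(&sb_x, memory_order_seq_cst);
    return NULL;
}

/* Returns true if the forbidden outcome (both reads 0) was never
 * observed over 'iters' runs. */
static bool sb_never_both_zero(int iters)
{
    for (int i = 0; i < iters; i++) {
        pthread_t a, b;
        atomic_store(&sb_x, 0);
        atomic_store(&sb_y, 0);
        pthread_create(&a, NULL, sb_t0, NULL);
        pthread_create(&b, NULL, sb_t1, NULL);
        pthread_join(a, NULL);
        pthread_join(b, NULL);
        if (sb_r0 == 0 && sb_r1 == 0)
            return false;
    }
    return true;
}
```

With relaxed (or even acquire/release) ordering, both reads returning 0 would be allowed. A run like this can only fail to observe the forbidden outcome; it cannot prove it never happens, which is why formal verification tools are valuable for this kind of code.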

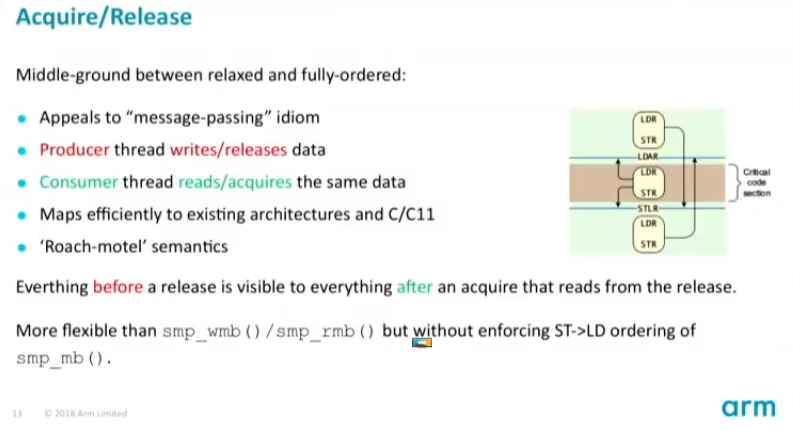

Acquire/release is a middle ground between relaxed and fully-ordered; these operations can be chained together without loss of cumulativity.
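
A sketch of such a chain, using C11 release/acquire as an assumed analogue of the kernel’s smp_store_release()/smp_load_acquire() pairing (all names are hypothetical). Stage 0 writes plain data then sets flag1 with release; stage 1 waits for flag1 with acquire and sets flag2 with release; stage 2 waits for flag2 with acquire and reads the data. Because the ordering carries across the chain, stage 2 always sees the value written by stage 0, even though it never synchronizes with stage 0 directly. The spin loops call sched_yield() only to keep this user-space demo fast on machines with few CPUs.

```c
#include <pthread.h>
#include <sched.h>
#include <stdatomic.h>

static int chain_data;               /* plain, non-atomic payload */
static atomic_int flag1, flag2;

static void *stage0(void *arg)
{
    (void)arg;
    chain_data = 42;
    /* Release: the data write is ordered before the flag1 store. */
    atomic_store_explicit(&flag1, 1, memory_order_release);
    return NULL;
}

static void *stage1(void *arg)
{
    (void)arg;
    /* Acquire pairs with stage0's release... */
    while (atomic_load_explicit(&flag1, memory_order_acquire) == 0)
        sched_yield();
    /* ...and this release passes the ordering on to stage2. */
    atomic_store_explicit(&flag2, 1, memory_order_release);
    return NULL;
}

static void *stage2(void *arg)
{
    int *out = arg;
    while (atomic_load_explicit(&flag2, memory_order_acquire) == 0)
        sched_yield();
    *out = chain_data;  /* guaranteed to read 42 */
    return NULL;
}

/* Runs the three-stage chain once and returns what stage2 read. */
static int run_chain(void)
{
    pthread_t t0, t1, t2;
    int seen = -1;
    chain_data = 0;
    atomic_store(&flag1, 0);
    atomic_store(&flag2, 0);
    pthread_create(&t0, NULL, stage0, NULL);
    pthread_create(&t1, NULL, stage1, NULL);
    pthread_create(&t2, NULL, stage2, &seen);
    pthread_join(t0, NULL);
    pthread_join(t1, NULL);
    pthread_join(t2, NULL);
    return seen;
}
```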

Depending on the architecture, the acquire or release variants might be identical to the fully-ordered ones; but this granularity allows architectures that natively support the weaker semantics to implement and use them.

Generic locking

The Linux kernel has generic implementations of many locking primitives in kernel/locking/*. These are very useful, portable, and can be formally verified. But are they performant enough?

He started by looking at qrwlock, a generic implementation of the rwlock semantics that can replace architecture-specific ones; qrwlock has faster write performance, but lower read rates than the architecture’s own rwlock.

qspinlock is also a generic implementation relying on very simple architecture locking primitives, and it performs very well. Will says that enabling it for an architecture is as simple as selecting it in Kconfig. Of course, you must first verify that your memory model matches what this algorithm expects. He was a bit hesitant to enable it at first, because he wasn’t sure it would work as expected.
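
The kernel’s qspinlock is based on the MCS lock, where each waiter spins on its own queue node. Below is a minimal user-space sketch of a classic MCS lock in C11, assuming only an atomic exchange, a compare-and-exchange and release/acquire ordering from the architecture. It is not qspinlock itself, which packs the queue tail into a 32-bit lock word. All names are hypothetical, and the spin loops call sched_yield() so the demo behaves on oversubscribed machines, where kernel code would use cpu_relax().

```c
#include <pthread.h>
#include <sched.h>
#include <stdatomic.h>
#include <stddef.h>

/* One queue node per waiting thread. */
struct mcs_node {
    _Atomic(struct mcs_node *) next;
    atomic_int locked;  /* 1 while this waiter must keep spinning */
};

/* The lock itself is just a pointer to the queue tail (NULL = free). */
typedef _Atomic(struct mcs_node *) mcs_lock_t;

static void mcs_acquire(mcs_lock_t *lock, struct mcs_node *me)
{
    atomic_store_explicit(&me->next, NULL, memory_order_relaxed);
    atomic_store_explicit(&me->locked, 1, memory_order_relaxed);
    /* Enqueue as the new tail: release publishes our node's init,
     * acquire pairs with the previous holder's release of an empty queue. */
    struct mcs_node *prev =
        atomic_exchange_explicit(lock, me, memory_order_acq_rel);
    if (prev == NULL)
        return;  /* queue was empty: lock taken */
    /* Link behind our predecessor, then spin on our own flag only. */
    atomic_store_explicit(&prev->next, me, memory_order_release);
    while (atomic_load_explicit(&me->locked, memory_order_acquire))
        sched_yield();
}

static void mcs_release(mcs_lock_t *lock, struct mcs_node *me)
{
    struct mcs_node *next =
        atomic_load_explicit(&me->next, memory_order_acquire);
    if (next == NULL) {
        /* No visible successor: try to swing the tail back to empty. */
        struct mcs_node *expected = me;
        if (atomic_compare_exchange_strong_explicit(lock, &expected, NULL,
                memory_order_release, memory_order_relaxed))
            return;
        /* A successor is enqueuing; wait until it links itself. */
        while ((next = atomic_load_explicit(&me->next,
                                            memory_order_acquire)) == NULL)
            sched_yield();
    }
    /* Hand over: release our critical section to the successor. */
    atomic_store_explicit(&next->locked, 0, memory_order_release);
}

/* Demo: several threads increment a plain counter under the lock. */
static mcs_lock_t demo_lock;  /* static, so starts as NULL (unlocked) */
static long shared_counter;   /* protected by demo_lock */

static void *mcs_worker(void *arg)
{
    long iters = *(const long *)arg;
    struct mcs_node node = { NULL, 0 };
    for (long i = 0; i < iters; i++) {
        mcs_acquire(&demo_lock, &node);
        shared_counter++;  /* non-atomic, safe under the lock */
        mcs_release(&demo_lock, &node);
    }
    return NULL;
}

/* Runs up to 8 workers and returns the final counter value. */
static long mcs_demo(int nthreads, long iters)
{
    pthread_t t[8];
    if (nthreads > 8)
        nthreads = 8;
    shared_counter = 0;
    for (int i = 0; i < nthreads; i++)
        pthread_create(&t[i], NULL, mcs_worker, &iters);
    for (int i = 0; i < nthreads; i++)
        pthread_join(t[i], NULL);
    return shared_counter;
}
```

The handoff writes only to the successor’s node, so each waiter spins on its own cache line instead of all CPUs hammering the same lock word.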

So he started looking at verification tools and testing: tools/memory-model for example, formal specification languages like TLA+, or testing with herdtools. But this is still quite complex, as “people are getting PhDs out of this”. It’s slow, but it will get there.

He also encouraged the audience to carefully review code using atomics and other generic locking primitives, and not to hesitate to Cc: him and the other atomics maintainers for help in the review process.

Coccinelle: 10 years of automated evolution in the Linux kernel — Julia Lawall

The Linux kernel is a large codebase that is still evolving rapidly. This raises the question of how to evolve kernel code at scale. A simple idea is to raise the level of abstraction and write semantic patches. That’s where Coccinelle comes in, with its Semantic Patch Language (SmPL) and a tool to apply these modifications. It was first released in 2008.

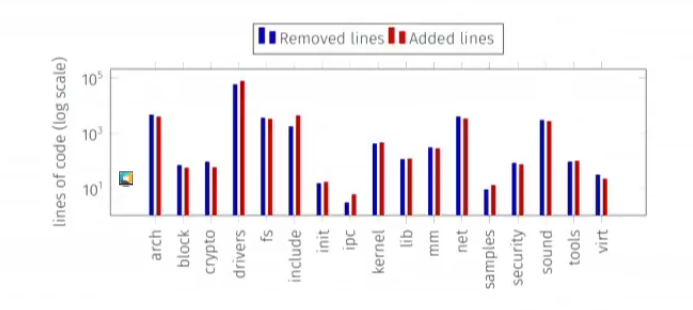

There were over 5500 commits in that period. Initially only the Coccinelle developers used it; then other kernel developers started using it, including, recently, Outreachy interns. Julia noted that 44% of the 88 kernel developers who have a patch touching at least 100 files have at least one commit that uses Coccinelle.

The original idea was to keep the language as simple as possible, so that it’s easy and convenient to use. But real-world constraints, like language evolution, also pushed them to add new features: position variables and script rules. Script rules can, for example, print a message based on what Coccinelle matched (including, through position variables, the line of code). Many patches (43%) made use of these new features, as do all the semantic patches present in the Linux source tree.

An original design goal of Coccinelle was performance: it only parses .c files, works only within a single function at a time, doesn’t include headers by default, and doesn’t expand macros. But real-world constraints showed that even so, parsing 1M or 15M lines of code is still very slow, because of the parser’s backtracking heuristics. They added parallelism with parmap, and pre-indexing, to improve on this. Running Coccinelle with the 59 semantic patches present in the Linux kernel takes about the same time as building the full kernel, Julia says.

Correctness guarantees were also at the core of the design. It has proven pretty successful in that regard, as long as developers write correct semantic patches.

The strategy used by the Coccinelle team to raise their visibility was to show by example how the tool could be used, by solving real-world issues that Linux kernel developers encountered. They found parsing bugs in unreachable code (hidden behind ifdefs), fixed many issues at refactoring points like irq, etc. This generated initial interest, and it was presented at many conferences (including Kernel Recipes!).

Its impact has reached all over the kernel, and it has been used for both cleanups and bug fixes. Julia showed how maintainers really took ownership of the tool, writing very long semantic patches without help from the Coccinelle team.

JMake is a tool built on top of Coccinelle that helps verify semantic patches by making sure the changed lines are actually built with the proper configuration. Prequel is another tool built on top of it, which searches the git commit history with a semantic patch and ranks the results from most to least pertinent. Prequel is a frontend to Coccinelle, and Steven from the audience said it might be very useful to have git integration for this tool.

In conclusion, Julia said that the tool’s initial decisions are still valid, with some extensions. They kept the core without feature creep, and had a wide impact on code everyone is running.

TPM enabling the Crypto ecosystem for enhanced security — James Bottomley

Everybody needs help protecting secrets, James started. A secret in this case might be, for example, RSA/ECC keys used for identity. These keys, when used to represent an identity, need to be protected as well as possible, which means using a USB Hardware Security Module (HSM). The issue is that most of those can only carry one key.

One key is far from enough: James says he is currently using 12. A TPM can help scale beyond these HSMs’ limitations. TPMs are chips with shielded memory that are now ubiquitous, present in most laptops. Unfortunately, they offer a very bad programming experience: they use the TSS model, which is very complex.

The Linux TSS for TPM 1.2 is badly designed, with tcsd being a single daemon shared between all users. For TPM 2.0, this can be improved upon.

TPMs can do many things: attestation, data sealing, etc. But James focuses on key shielding, which is about storing keys in the TPM. TPM 2.0 has algorithm agility, while TPM 1.2 is limited to SHA-1/RSA-2048. TPM 2.0 chips usually support a few elliptic curve algorithms and SHA-2, the latter being a much better choice of hash function.

TPM 2.0 generates keys from random numbers using key derivation functions; RSA keys are very slow to generate, though: James showed that it took 43s on his laptop to generate one. It’s much faster for elliptic curves, or on latest-generation TPMs.

Keys are derived from a seed, and only the TPM inside a given device is able to generate a key from it; a stolen seed is therefore unusable without the TPM inside the laptop. Since a key can never be extracted, you can’t really afford to tie your identity to the lifetime of the device: if you store a key inside a TPM, you need a way to get the original back, and another way to convert it into a form usable by a new TPM.

James is very critical of the TCG: TPM 2.0 is still not complete, even though devices are already shipping.

Support is improving in Linux, with TPM 2.0 resource manager support having landed in 4.12.

James says that the IBM TSS (TCG Software Stack) has been available for a long time and can be used to establish an encrypted session from your application directly to the TPM2 device. It’s simpler to use, and based on TPM2 commands: James says you can build a secure crypto system with only 5 commands. It’s possible to generate the key directly on the TPM, but since the Infineon prime bug, most people don’t trust TPMs anymore, and generate keys outside the TPM before importing them. The keys aren’t really stored inside the TPM: the TPM provides an encrypted blob, which the application must store and load back when it needs to use the key. There are other functions for signing, RSA decryption and ECDH.

Since the commands carry a lot of sensitive information, there must be a secure channel to the TPM, which the ESAPI (Enhanced System API) provides through encrypted sessions. This ensures nothing between the TPM and the app can read the data being exchanged. When the app is the kernel (for random number generation or storage), direct snooping on the bus is a potential attack that these encrypted sessions thwart.

An issue with the ESAPI is that only the first parameter (!) is encrypted. So they had to add new commands to take this into account with double-encryption of the other parameters.

A disadvantage of TPMs is that keys are tied to a single physical laptop, and need to be converted again when you change laptops. You also need a recent kernel (4.12 or later) for TPM 2.0.

Integration with OpenSSL through an external engine is progressing on James’ side, and already works well. One issue is that not all elliptic curves are supported: the only two mandated are BN-256 and P-256, and Curve25519 is not even on the TCG radar. It’s possible to load OpenSSL, OpenVPN, OpenSSH and, more recently, GnuPG keys. James showed a demo of generating a GPG key and loading it into a TPM, and then protecting a new subkey with the TPM.

James says this works really well and is integrated with many software projects.

That’s it for this morning! Continue with the afternoon liveblog!