Welcome back to the liveblog, following-up on this mornings’s live blog.

We start the afternoon with the charity auction, benefiting “Les Restos du Cœur”, a charity serving meals to starving people. A particularity this year is that the auction will happen in meals, not € (1 meal = 1€).

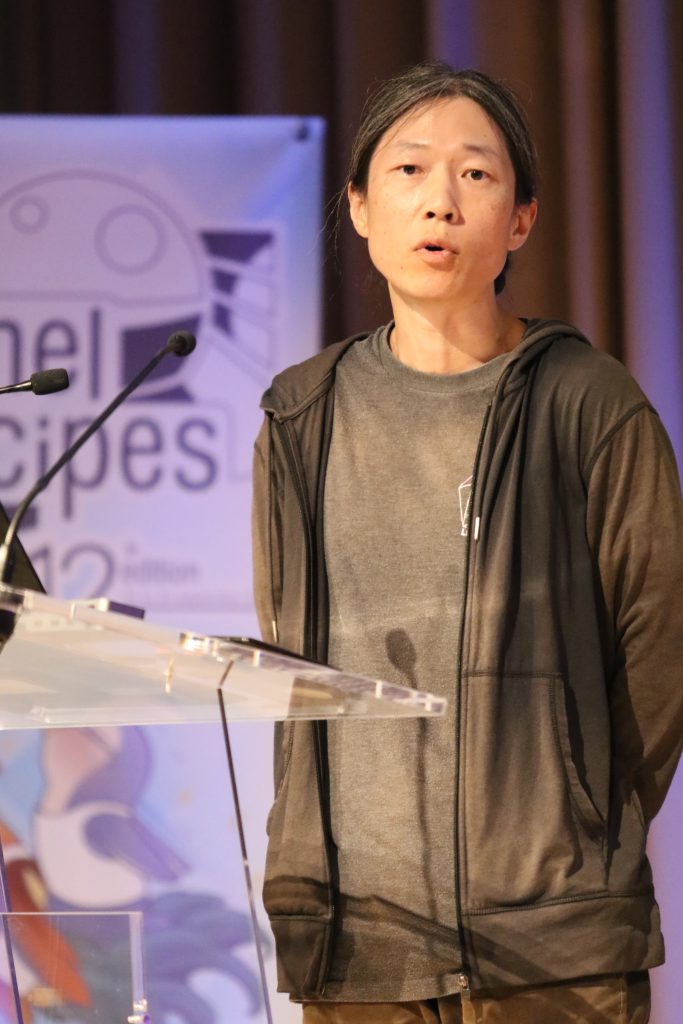

Overcoming Observer Effects in Memory Management with DAMON — SJ Park

SJ is the DAMON in-kernel monitor maintainer. The presentation starts with a user story of a team at a Cloud provider trying to reduce the allocated memory for VMs. But it found a major challenge in accounting the real memory requirements: their approach had a lot overhead and accuracy issues. At the same time, a kernel developer was looking for user for their new kernel feature, DAMON. It ended up working properly, with auto-scaling memory service working properly and shipping in an AWS product.

SJ is the DAMON in-kernel monitor maintainer. The presentation starts with a user story of a team at a Cloud provider trying to reduce the allocated memory for VMs. But it found a major challenge in accounting the real memory requirements: their approach had a lot overhead and accuracy issues. At the same time, a kernel developer was looking for user for their new kernel feature, DAMON. It ended up working properly, with auto-scaling memory service working properly and shipping in an AWS product.

The goal of computers is to process data, SJ says. The memory is the medium for storing data. But the data created has been exploding. Reality isn’t a Turing machine with infinite memory, and has to follow the laws of physics. The usual solution to those limits is to create a hierarchical memory system that follows hardware limitations: from CPU caches, to DRAM, to SSD and HDD for frozen data. Software is then optimized for hierarchical memory management.

An issue is that data access monitoring triggers observer effects. So in practice we compromise with a high-level view. A solution that is non-ideal, but practical. DAMON is one such approach focusing on a general view.

The goal of DAMON is have a space-time view, or something that approaches it. This generates Space Overhead (memory usage), and Time Overhead (time to generate a snapshot). To prevent those contention, DAMON first uses regions to split memory into reasonably atomic units of granularity; it also reduces the monitoring polling. This allows controlling both space overhead (memory) and time overhead (computing).

The size of a region is dynamically auto-tuned, and wasteful regions are merged, and others are randomly split. The user only controls the min and max number of region. Accuracy is also automatic. The time granularity is also dynamic.

The output of DAMON needs to take care about having the proper sampling interval to prevent having too little or too much information. It is controlled by user asking the minimum and maximum sampling intervals and desired amount of events, then having a feedback loop to auto-tune.

SJ admits, that setting the auto-tuning parameters is yet “another heuristic”; but the maintainer is available to help maintain and debug the recommendations. DAMON can be used for profiling (room for improvement, capacity planning), PGO (Profile-guided Optimizations).

DAMON is used in AWS Aurora Serverless 2. It can be used for memory tiering (with CXL) to migrate hot data in slower NUMA nodes to faster nodes. SK Hynix and Meta are both using this for example. An extension for cgroup-awareness is in review. Page-level data access monitoring was developed by Meta for page access pattern profiling. Meta also deployed fleet-wide data access monitoring.

DAMON was merged upstream in Linux 5.15, and SJ recommends using the latest kernel. Userspace tools have been packaged in most distros.

Improving Linux for (KVM) Real time Workloads — Leonardo Brás Soares Passos

Leonardo used to work in the Virt-RT team at Red Hat, and this is the work he’ll be presenting.

Leonardo starts by explaining what is Real-Time (RT): “I need you to deal with my request within T time, or else…”. So making sure deadlines are guaranteed, or what Leonardo calls the Maximum acceptable “Response time” by a given workload. The type of Real-Time system changes depending on the impact of missing a deadline: in a hard real time it’s a critical system failure, in a soft real time it just degrades the quality of the service.

Real-Time used to be done on baremetal to avoid the overhead of an OS. But having an OS is really nice, so why not have one focused on RT? Developing on Linux is really nice, so people want to do that. This is why PREEMPT_RT (and others before) was developed, in order to add deterministic behavior to an OS that was initially optimized for throughput instead of latency.

A scheduling policy is needed to be able to run a “real time” task first. There are three RT scheduling policies in linux: SCHED_FIFO, SCHED_RR and SCHED_DEADLINE. Each one has a different policy: FIFO runs tasks of same priority to completion in the same priority level, RR uses round-robin amongst tasks of the same priority level, and DEADLINE runs the tasks with the first deadline first.

PREEMPT_RT makes the kernel yield time to real time tasks. It does that in multiple ways. For example, it replaces locking primitives with ones that are preemptable and priority-inversion aware.

CPU isolation allows, from boot parameter isolcpus, to block Isolated CPUs to have no tasks running on them, except what the user controls; it reduces workload interruption from “housekeeping” kernel code. If you have single task pinned per CPU, RT is “solved” since you don’t have any competing task.

There are a few tests to evaluate the kernel for RT- compatibility: cyclictest for example verifies predictability of wake-up after a sleep. oslat verifies maximum interrupt time for userspace by reading time in a loop.

Real Time on Virtual Machines

KVM guests are running on isolated CPUs, and vCPUs are pinned to physical cores. Both guests and hosts are running PREEMPT_RT kernels. Leonardo says that it might be needed for the same reasons we want VMs.

It does have drawbacks: guest_exit and guest_entry both add latency. Network latency needs to be taken into account.

Leonardo worked on adding tracepoints, avoiding isolcpu for workqueues, improving RCU behaviour for this usecase, and change the local_lock strategy to reduce the number of Inter-Process Interrupts (IPI) for Isolated CPUs.

Program Verification for the Linux Kernel: potential costs and benefits — Julia Lawall

The Linux Kernel seems reliable, but actually it is full of bugs. Some fit well-known patterns, and can be detected with runtime or static checks. Other need formal verification.

Formal verification needs a specification, and sometimes just thinking about this verification is enough to find bugs. But creating this specification is often very hard, Julia says. And it can even be wrong, from the start, or by becoming obsolete.

This talk is just a thought experiment, Julia says, to see what can be done in this direction.

Julia shows a simple swap C function, and exposes properties we might wish to verify. For this example, the tool to use will be Frama-C. It requires annotating the code with Pre- and Post- conditions to describe the constraints. An SMT solver is then ran to verify whether those are satisfied or not. For the swap example, pre-conditions are that pointers are valid. The post-conditions are that the new *p value is the same as the old *q value, and vice-versa. A comment is added on top of the function with those constraints, then running the frama-c cli will verify the function.

The goal is to apply all of this to the Linux scheduler. Julia says it comes from a frustration of not being able to understand what the scheduler is doing. The approach is to take a simple function, and prove it with Frama C with dummy definitions for any C external functions. This approach ignores concurrency or hidden memory issues as a first step.

A first case study is the should_we_balance function that decides whether tasks should be stolen during load balancing. It was added to the kernel in 2013, with an incorrect patch, then fixed before release in Linux v3.12. There has been 10 variants over time, with several recent optimizations. It was chosen mostly as an example.

The function loops of CPUs, and elects one to steal, by searching for an idle one, then returns if the first one it found is different from the one current one. Julia wrote a pre- and post- conditions in Frama-C for the initial, buggy version of the function. (Julia says that maybe someone should hire verification experts to write those constraints.) On a test machine, Frama-C proved the function under one minute.

Julia asks what should be done as the code changes: should the constraints be updated? For optimizations, no, because the input-output behaviour should not change. Ditto for comments, or code reorganization. Some code changes will require simple proof changes. But sometimes simple algorithm change will have a much bigger impact on the proof, like adding a huge state machine in the specification.

While it can be very hard to prove (it took 3 months in one case), it found a bug in the code in the latest code, that was then fixed upstream.

Complexities in the code are magnified in the specifications, exploding the proof time and effort. Some tools to isolate relevant code might help. Tools to facilitate writing specifications as well. Or tools to help react to code changes. The code samples from this presentation are available.

This live blog is over for today, continue reading on day 3.

0 Comments