Welcome back to the live blog! This continues from yesterday’s live blog.

Recipe for baking a GCC — Thomas Schwinge

Thomas is a GCC developer, but has also contributed about 20 lines of code to the Linux kernel. He has been contributing to GCC for 17 years. He started using Linux with version 2.0.36, and has been developing open source software since 2001. He joined BayLibre when many former CodeSourcery engineers joined the company to create a new compiler services business unit.

When Thomas polled the audience, a majority said they still use GCC to build Linux. GCC started in 1987 and is still going strong, with one major release per year and multiple minor releases per year on the release branches, to which patches are cherry-picked from the main branch. GCC gets about 650 commits and 100 contributors per month.

Red Hat and AdaCore were the two top employers of people contributing to GCC 15. Many other well-known companies contribute too, including Thomas’ employer.

GCC has been developed in Git for a few years now, and the full history has been converted. The source tree also contains multiple imported or vendored libraries.

When built, GCC produces multiple binaries: the “drivers” like gcc, g++, gfortran, etc., and the actual compilers: cc1, cc1plus, f951, etc. There is also linker interfacing, and runtime libraries like libgcc, libstdc++, libasan, etc. The binutils (assembler, linker, etc.), glibc and gdb are developed by separate teams, in separate source trees.

Internally, GCC supports multiple languages, which are converted into ASTs by the language front ends, then into intermediate representations like GENERIC, GIMPLE and RTL. In theory those are supposed to be language-agnostic (and, at the high and mid levels, target-agnostic), but this does not always work, since there are always language-specific details (C++ throw/catch?) or target-specific ones (how big is an int?).

Since 2021, assigning copyright to the FSF is no longer mandatory; contributors can instead certify the DCO (Developer Certificate of Origin) with a Signed-off-by line. The project is led by the GCC Steering Committee, although it tries to stay out of day-to-day development. The contributors do the actual work, ranging from “random contributors”, to people with write access, to maintainers and reviewers.

The wider GCC community also has ties to language committees, downstreams (distributions…), student and research projects, etc.

Contributions go through an email-based workflow, though an experimental pull-request-based workflow is being tested. Some sub-projects have their own infrastructure (CIs, or different chat boards). Developers meet every year at the GNU Tools Cauldron, the annual toolchain community meeting; the next one is just next week. There has also been a FOSDEM devroom since 2024.

There are two efforts to compile Rust with GCC. In the upstream Rust project, rustc_codegen_gcc is a backend for rustc that uses libgccjit for AOT (Ahead of Time) code generation. The other effort is gccrs, in upstream GCC, which aims to provide a Rust front end directly in the GCC source tree. The latter has made a lot of progress over the years, and should be able to compile the core library of Rust 1.49 this year.

There is also a project to add BPF support to GCC, maintained by Jose E. Marchesi and other colleagues at Oracle. Thomas demoed compiling a BPF program and loading it into the kernel of a QEMU VM: in one case the kernel’s BPF verifier correctly rejected a bad program, and then it accepted a correct program that traced the openat syscall. Both cases are part of GCC’s testsuite, to make sure they keep behaving as expected.

The CRA and what it means for us — Greg Kroah-Hartman

Greg never expected to be talking with lawyers as a developer, but “here I am”, he said. This talk represents his personal opinion, and “us” means Open Source developers in this context.

The Cyber Resilience Act (CRA) defines a Product with Digital Elements (PDE) as any product that has software in it. The CRA pushes for listing the software “ingredients” in a device, and whether those are “safe”. It’s generally a good thing, Greg says.

This EU regulation impacts everyone who might sell a product (PDE) in the EU, even over the Internet, so it affects everyone, Greg says. It has exceptions for Open Source, but those do not relieve projects of all responsibilities. Web and cloud services got a carve-out, but laptops and Chromebooks are included, Greg says.

Market surveillance and enforcement is done by country-specific CSIRTs (Computer Security Incident Response Teams) and ENISA (the European Union Agency for Cybersecurity); people are talking to each other, which is good, Greg says. The CRA sets cybersecurity requirements for the whole PDE life cycle: there must be vulnerability reporting and handling. Open Source projects are not required to fix issues reported by companies. Companies will ask anyway, and Greg already has form letters for refusing them.

There are requirements for providing Software Bills of Materials (SBOMs) for PDEs, which will be good for open source. Products fall into different classifications: Default, Level 1, Level 2 and Critical.

Automotive, medical, aeronautical and marine products are not covered, and neither is SaaS. Hobbyist open source projects are not covered either, until they end up included in commercial products.

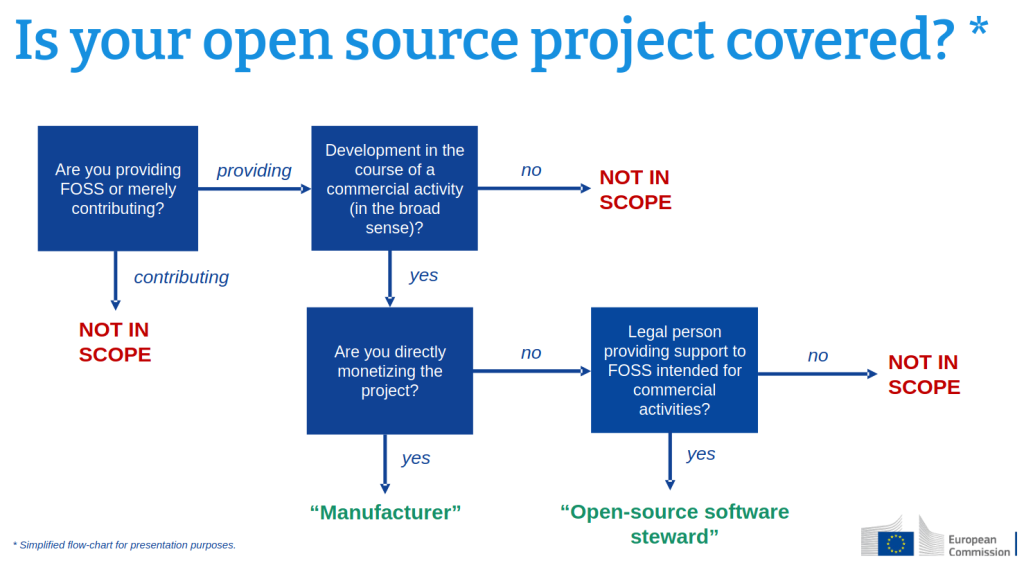

If an open source project is being monetized, then it’s covered as a Manufacturer; legal persons or foundations are covered as an “OSS Steward”. There is currently disagreement on what exactly “legal person” means.

An open source steward’s only responsibilities are to provide a contact for security issues and to publicly report integrated security fixes; that’s it. Providing fixes is not required. Greg says you should already be doing this: providing a security.txt, becoming a CNA (CVE Numbering Authority). The OSSF, working with Greg, came up with a Stewards Obligations Checklist.

“It’s going to get worse”, Greg warns: companies will ask open source projects to do their work for them, like Emerson did.

The CRA is coming soon for companies: starting in September 2026, they will have to care. It will come a year later for OSS Stewards. We will know in June 2026 what enforcement will look like.

Greg argues that the requirements for OSS Stewards are so low that any project should already be doing that.

Some of the wording on Manufacturer vs Steward is still unclear, and it’s going to be improved in the next drafts. The CRA will have a good impact, since Manufacturers will have to fix security issues within a fixed, relatively short time. That is shorter than what currently happens for CPU microcode, Greg says, so it’s going to change things. Asked whether a hobbyist electronics maker will be covered, Greg said yes, just like they are today with CE marking.

Manufacturers will have to be more careful to pick software dependencies that can fix security issues (or to fix them themselves), Greg says; they have always had this problem, though: they have just been ignoring it.

Product lifetimes are already defined in another EU law today. For example, laptops have to be covered for 7 years after the last unit is sold.

What counts as a vulnerability is still being worked out. Standards are voluntary, and some won’t be finished (and ratified) before December 2027; Kees Cook is participating in their development. They are mostly about risk management.

A Rusty Odyssey: A Timeline of Rust in the DRM subsystem — Maira Canal

Maira has worked on maintaining the Raspberry Pi DRM driver, and wanted to take a step back and give an overview of what happened with Rust for Linux (RfL) over the past years, with a focus on GPU drivers specifically.

Timeline

The first RfL RFC was posted at the beginning of 2021. It took almost two years for minimal support to be released, in Linux 6.1 (December 2022). From that point on, things moved quite fast.

The first part, Maira says, is the “Asahi Era”. Asahi Lina’s blog post “Tales of the M1 GPU” was published in 2022, even before Rust was merged in mainline, which makes it even more fascinating, Maira says. The Apple AGX driver is a reverse-engineered driver for Apple M1 and M2 GPUs, supporting a couple of firmware revisions. Asahi Lina had to write all the Rust abstractions for DRM. The RFC for the driver abstractions was sent in March 2023, with the optimistic goal of getting them upstream in 6.5, and the driver in 6.6. Unfortunately it’s not upstream yet.

Then came the “DRM scheduler pushback”: back then, the community hadn’t reached consensus on how many changes to the C code were acceptable to make safe abstractions easier. The DRM scheduler patches tried to fix lifetime issues of C objects, in order to be able to encode them into Rust types. Lifetime questions include who owns an object, who frees it, how long a pointer is valid, whether it can alias or mutate, and whether it is thread-safe. Those answers are then translated into Rust constructs like Drop, PhantomData, NonNull, typestates and Send/Sync. The DRM scheduler works (most of the time), Maira says, and even had rules for object lifetimes, but those were not clearly documented. Consensus wasn’t reached, and after this event, the Rust conversation disappeared from the DRM mailing lists. Later, different people joined the Rust for Linux project, and tried to reach consensus or find different approaches.
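To make that mapping a bit more concrete, here is a minimal, self-contained sketch. It is not code from the DRM scheduler or from any kernel abstraction: `RawJob`, `raw_job_alloc()`, `raw_job_free()` and `Job` are hypothetical, and the C-owned object is simulated with `Box::into_raw` instead of real FFI. It shows `NonNull` encoding “never null”, `Drop` encoding “the wrapper frees it”, `PhantomData` plus marker types encoding a typestate (a job can only be submitted once), and an explicit `unsafe impl Send` recording a thread-safety assumption.

```rust
use std::marker::PhantomData;
use std::ptr::NonNull;

// Hypothetical C-side object; a real driver would get this from C via FFI.
#[repr(C)]
struct RawJob {
    id: u32,
    submitted: bool,
}

// Hypothetical stand-ins for C allocation/free functions.
fn raw_job_alloc(id: u32) -> *mut RawJob {
    Box::into_raw(Box::new(RawJob { id, submitted: false }))
}

/// # Safety
/// `p` must come from `raw_job_alloc` and must be freed only once.
unsafe fn raw_job_free(p: *mut RawJob) {
    unsafe { drop(Box::from_raw(p)) };
}

// Typestate markers: uninhabited types that only exist at the type level.
enum Pending {}
enum Submitted {}

// Owning wrapper: NonNull says "never null", PhantomData carries the state.
struct Job<State> {
    raw: NonNull<RawJob>,
    _state: PhantomData<State>,
}

impl Job<Pending> {
    fn new(id: u32) -> Self {
        let raw = NonNull::new(raw_job_alloc(id)).expect("allocation failed");
        Job { raw, _state: PhantomData }
    }

    // Consumes the pending job, so the same job cannot be submitted twice.
    fn submit(self) -> Job<Submitted> {
        let raw = self.raw;
        // Ownership moves to the returned value: skip the old value's Drop.
        std::mem::forget(self);
        // SAFETY: `raw` is valid and uniquely owned by this wrapper.
        unsafe { (*raw.as_ptr()).submitted = true };
        Job { raw, _state: PhantomData }
    }
}

impl<State> Job<State> {
    fn id(&self) -> u32 {
        // SAFETY: the pointer stays valid until Drop runs.
        unsafe { self.raw.as_ref().id }
    }
}

impl Job<Submitted> {
    fn is_submitted(&self) -> bool {
        // SAFETY: same invariant as `id`.
        unsafe { self.raw.as_ref().submitted }
    }
}

// The wrapper, not the caller, decides when the object is freed.
impl<State> Drop for Job<State> {
    fn drop(&mut self) {
        // SAFETY: allocated by raw_job_alloc and freed exactly once, here.
        unsafe { raw_job_free(self.raw.as_ptr()) }
    }
}

// NonNull is !Send by default. Assumption for this sketch: the object has
// no thread affinity, and Job owns it uniquely, so moving it is fine.
unsafe impl<State> Send for Job<State> {}

fn main() {
    let job = Job::<Pending>::new(42);
    let job = job.submit();
    // job.submit(); // would not compile: Job<Submitted> has no `submit`
    println!("job {} submitted: {}", job.id(), job.is_submitted());
}
```

The design choice here is that invalid sequences (submitting twice, forgetting to free, freeing twice) become compile errors or are handled by `Drop`, which is the kind of guarantee the clearer C lifetime rules were meant to enable.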

This started the “Nova Era”. nova is a driver for the latest generations of NVIDIA GPUs (those that use the GSP firmware), as a successor to nouveau; nova’s stub driver has been merged in mainline, but it’s still in development. RVKMS is a port of the vkms (Virtual KMS) driver to Rust, but it’s not upstream yet. For KMS, the Asahi driver used C code; Lyude Paul had to write the KMS abstractions from scratch. The Nova project is designed to grow with upstream and the community, instead of merging everything at once. This reduces the number of rebases the developers have to do, for example, and it grows the community.

In the Current Era, “2025 will be the year of Rust GPU drivers”, as Daniel Almeida put it. Daniel and Alice Ryhl announced Tyr, a Rust-based DRM driver for Arm Mali GPUs. Its stub driver is queued for 6.18. More and more functionality is being added to Tyr and nova. There are ongoing efforts to improve the C DRM scheduler with documentation and fixes for undefined behaviour and leaks, and fairness is being added. At the same time, a new scheduler is being developed in Rust. The community is converging on a new drm-rust git tree, based on the drm one.

Why does Rust seem so perfect for GPU drivers

First, there are the usual Rust advantages, which the audience should already be familiar with, Maira says. GPUs are highly concurrent, so the language’s help with ownership and lifetime management is valuable.

GPU architecture is also evolving: many GPUs have dedicated coprocessors that handle, in firmware, tasks that used to be done in the drivers. NVIDIA has the GSP, Apple has the ASC running RTKit, Arm Mali has the CSF, and AMDGPU has at least 3 coprocessors.

This usually means unstable firmware APIs. Firmware is usually proprietary, and vendors can just bundle it with their drivers on proprietary OSes. This poses issues for OSS developers: how do you deal with unstable APIs? In C, this leads to lots of duplication, with many different structs, one per version. Macros can help, but they are not easy to maintain or read.

In Rust, you can use procedural macros to add conditions to fields that depend on firmware versions, and let the macro generate the different struct versions at compile time. Maira showed an example that stays simple to read with the conditions in place, along with the corresponding code as expanded by rust-analyzer.
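The macros from the talk are procedural macros, which need their own crate, so the self-contained sketch below swaps in a simpler declarative `macro_rules!` macro to illustrate the same idea. It takes a list of common fields plus per-version extra fields, and generates one `#[repr(C)]` struct per firmware version. The `versioned!` macro, the `RunJobV1`/`RunJobV2` structs, their fields and the `FwVersion` enum are all hypothetical, not taken from any real driver or firmware interface; per-version field lists are also a simplification of the per-field version conditions described above.

```rust
// Hypothetical sketch: generate one struct per firmware version from a
// shared field list plus version-specific extra fields.
macro_rules! versioned {
    // Internal rule: emit a single versioned struct.
    (@emit { $($common:tt)* } $ver:ident { $($extra:tt)* }) => {
        #[derive(Debug, Default)]
        #[repr(C)]
        pub struct $ver {
            $($common)*
            $($extra)*
        }
    };
    // Entry rule: `$common` is a single brace-delimited token tree, so it
    // can be reused for every version listed after it.
    ($common:tt $($ver:ident { $($extra:tt)* })*) => {
        $( versioned!(@emit $common $ver { $($extra)* }); )*
    };
}

// Hypothetical "run job" message: V2 firmware adds a priority field.
versioned! {
    { pub job_id: u32, pub gpu_va: u64, }
    RunJobV1 { }
    RunJobV2 { pub priority: u8, }
}

// Firmware version detected at probe time (hypothetical).
#[derive(Debug, Clone, Copy, PartialEq)]
pub enum FwVersion {
    V1,
    V2,
}

// Size of the message layout the driver must use for a given version.
pub fn run_job_size(ver: FwVersion) -> usize {
    match ver {
        FwVersion::V1 => std::mem::size_of::<RunJobV1>(),
        FwVersion::V2 => std::mem::size_of::<RunJobV2>(),
    }
}

fn main() {
    let v2 = RunJobV2 { job_id: 1, gpu_va: 0x1000, priority: 3 };
    println!("{:?}", v2);
    for ver in [FwVersion::V1, FwVersion::V2] {
        println!("{:?}: {} bytes", ver, run_job_size(ver));
    }
}
```

Each generated struct keeps a C-compatible layout through `#[repr(C)]`, and the compiler rejects any use of a field that a given version lacks (for example, `RunJobV1` has no `priority`), instead of relying on hand-maintained duplicate structs.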

Why did Rust succeed in DRM

The DRM maintainers were willing to bet on the success of Rust. The subsystem also uses a group maintainership model: multiple people are “committers” to the repository, which spreads the load between committers and maintainers. This helped the process.

The idea of merging sizeable stub drivers let the driver community grow, reducing the delta between upstream and development branches.

To conclude, Maira asks: is Rust the new standard in DRM? Probably, at least for new drivers.

In the audience, someone asked whether the Asahi driver was blocked from being merged because of the DRM scheduler. Maira said she could not answer for them, but that the scheduler patches were at least the last DRM Rust patches sent in 2023. Another question was why the scheduler wasn’t directly rewritten in Rust at the time, instead of using C bindings: it was not clear back then whether the DRM maintainers would accept a different, Rust scheduler, and the situation has changed since. From the audience, Alice said that the Rust DRM scheduler currently being written is not really a replacement for the C DRM scheduler: it won’t support CPU scheduling, only scheduling on GPUs, so it serves a different use case. Daniel Almeida added that any feature added in Rust can also get C bindings in order to have C users, so it’s not a one-way thing.

Continue reading the afternoon live blog.
